I found myself in a situation where I needed to modify temporal tables. We do this outside business hours because we don’t want clients changing data while versioning is off. Who wants to wait until off-hours to run scripts if you could devise a way to do it during work hours? This post walks you through how to change temporal tables without losing track of what is changing.

What are Temporal Tables?

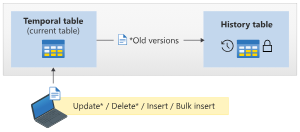

According to Microsoft, temporal tables are designed to keep a full history of data changes, allowing easy point-in-time analysis. The current table contains the current value for each row. The history table contains each previous value (the old version) for each row, if any, and the start time and end time for the period for which it was valid.

Use cases for temporal tables include:

- Auditing all data changes and performing data forensics when necessary

- Reconstructing the state of the data as of any time in the past

- Calculating trends over time

- Maintaining a slowly changing dimension for decision support applications

- Recovering from accidental data changes and application errors

My Situation

My first message to you is to never be in this situation. What is this situation? Using temporal tables for reporting. If you use the temporal table for reporting, you may have to clean up erroneous data in the temporal table. We are building a data warehouse to move reporting out of OLTP databases.

I would never use a temporal table for reporting. Now that we have to regularly fix data in temporal tables, I can see why I would never do that. You don’t want to miss data changing in the current table while table versioning is off. However, you must turn off versioning to change temporal table data.

You can block changes to the current table while changing the temporal table to avoid missing changes to the current table. This means no one else can change any data while you run the transaction to make your data changes because you are blocking on purpose. This does not scale. We have a very small environment. Also, turning off temporal tables is as easy as dropping an index. However, it takes time to turn it back on, just like adding an index takes time.

Setup The Scenario

Let’s set up a temporal table with the following script:

-- Create the WebsiteUserInfo table with auto-incrementing ID

CREATE TABLE WebsiteUserInfo

(

[ID] INT IDENTITY(1,1) NOT NULL PRIMARY KEY,

[UserID] INT NOT NULL,

[UserName] NVARCHAR(100) NOT NULL,

[PagesVisited] INT NOT NULL,

[ValidFrom] DATETIME2 (0) GENERATED ALWAYS AS ROW START,

[ValidTo] DATETIME2 (0) GENERATED ALWAYS AS ROW END,

PERIOD FOR SYSTEM_TIME (ValidFrom, ValidTo)

)

WITH (SYSTEM_VERSIONING = ON (HISTORY_TABLE = dbo.WebsiteUserInfoHistory));

-- Generate 100,000 rows for the table

DECLARE @Counter INT = 1;

WHILE @Counter <= 100000

BEGIN

INSERT INTO WebsiteUserInfo (UserID, UserName, PagesVisited)

VALUES (@Counter, 'User ' + CAST(@Counter AS NVARCHAR), @Counter);

SET @Counter = @Counter + 1;

ENDNow we have the table and some data in place. Depending on your SQL Server/Azure SQL database specs, that data might take a while to load. I ran this on Azure SQL Basic, and it took a while. I wanted to add enough rows to make this a somewhat sizable table, not just a few rows.

Modify Your Current Table and Its History Table

We will step through the entire transaction block statement by statement to test whether this works. You could run it all at once, but we want to see the lock blocking the insert to prove this blocks it. Start by executing the following query in one query window:

-- Assuming you want to delete rows with ID values 1 to 10

BEGIN TRANSACTION

DELETE FROM WebsiteUserInfo WITH (TABLOCK)

WHERE ID IN (1, 2, 3, 4, 5, 6, 7, 8, 9, 10);

After you execute the begin transaction and first delete statement above, you will want to run your insert statement on the current table. Execute the following query in another query window:

-- Generate 100,000 rows for the table

DECLARE @Counter INT = 1;

WHILE @Counter <= 100000

BEGIN

INSERT INTO WebsiteUserInfo (UserID, UserName, PagesVisited)

VALUES (@Counter, 'User ' + CAST(@Counter AS NVARCHAR), @Counter);

SET @Counter = @Counter + 1;

ENDNext, we can run the rest of the block in the first query window with the BEGIN TRANSACTION statement. The rest of the query block is included below:

ALTER TABLE [dbo].[WebsiteUserInfo]

SET (SYSTEM_VERSIONING = OFF)

GO

DELETE FROM WebsiteUserInfoHistory

WHERE ID IN (1, 2, 3, 4, 5, 6, 7, 8, 9, 10);

ALTER TABLE [dbo].[WebsiteUserInfo]

SET

(SYSTEM_VERSIONING = ON (HISTORY_TABLE = [dbo].[WebsiteUserInfoHistory]));

COMMIT TRANSACTION Once you commit the transaction, that will free up the TABLOCK on the current table and allow inserts in your other query window. With the current table and its history table all in the same transaction, you will prevent other users from changing data in the current table. This way, you won’t miss changes logged to the history table. This will cause blocking, though, so keep that in mind if you are worried about excessive blocking during business hours. Our tables are small, so it’s not an issue for us, and it’s less than 10 seconds.

For the Future

In the future, you could run the entire transaction without stepping through each statement. Note: You need the GO after the ALTER TABLE statement to turn versioning off. You will get an error without that GO when executing the transaction without stepping through each statement. The entire statement is included below:

-- Assuming you want to delete rows with ID values 1 to 10

BEGIN TRANSACTION

DELETE FROM WebsiteUserInfo WITH (TABLOCK)

WHERE ID IN (1, 2, 3, 4, 5, 6, 7, 8, 9, 10);

ALTER TABLE [dbo].[WebsiteUserInfo]

SET (SYSTEM_VERSIONING = OFF)

GO

DELETE FROM WebsiteUserInfoHistory

WHERE ID IN (1, 2, 3, 4, 5, 6, 7, 8, 9, 10);

ALTER TABLE [dbo].[WebsiteUserInfo]

SET

(SYSTEM_VERSIONING = ON (HISTORY_TABLE = [dbo].[WebsiteUserInfoHistory]));

COMMIT TRANSACTION Conclusion

As I mentioned, if your temporal table is large, this will be a terrible plan for you. However, this code will work if you have small temporal tables. Also, I recommend not reporting on temporal tables because they aren’t meant to be modified if reporting data needs to change to reflect an accurate portrayal of the data.

I use (auto created) triggers for auditing. And each trigger starts with

IF @@ROWCOUNT = 0 OR CONTEXT_INFO() = 0x1000 RETURN;

This way I can turn it off just for a single (maintenance) session by executing SET CONTEXT_INFO 0x1000 at the start of my cleanup stuff to bypass the whole trigger logic.

With temporal tables this not possible of course (but maybe someone stumbles over your article, who hasn’t already decided, how to do the auditing).

Could you not move the relevant temporal tables into a different filegroup and make that FG read_only for the purpose of the update and then back to R/W?

This comment is meant as a general ‘raising my finger’ when considering temporal tables

As you are explaining, Temporal tables can be tricky to do maintenance on.

Even more so when the OLTP databases are using Temporal tables, as is the case at my client.

They had numerous legacy solution that needed to be rewritten and rearchitectured.

for example, each solution had its own different way of handling history, so it was decided that all new solutions, should use Temporal tables.

This decision is causing some grief in the maintenance of data.

On top of normal datamaintenance, with GDPR rules, where we are required to remove data as it gets to a certain age, customers/citizens can require to be removed totally from the datasets etc.

So we have batchjobs, misc. scripts and other stuff running to achieve this, in general using the same method as you are describing.

Since ours is a 24/7 OLTP environment with millions of rows, we have to maintain the changes, one row/customer/citizen at the time, otherwise we would block others for too long, and they will get timeouts etc.

With millions of rows, the part where you turn SYSTEM_VERSIONING on again, can take quite some time

That is because it by default checks if the consistency between the ValidFrom and ValidTo is valid for all records.

This can be changed by adding the DATA_CONSISTENCY_CHECK flag, when turning SYSTEM_VERSIONING on, and telling not to check consistency:

ALTER TABLE [dbo].[WebsiteUserInfo] SET (SYSTEM_VERSIONING = ON (HISTORY_TABLE = [dbo].[WebsiteUserInfoHistory], DATA_CONSISTENCY_CHECK = OFF ));

In our case where we are almost always deleting all of the data belonging to the primary key in the both tables, we can do this safely.

We have rare datamaintenance scripts that needs to handle more complex changes, sometimes including the ValidFrom and ValidTo columns

Therefore our scripts looks something like what you see below

We have also a section that checks that the system versioning is turned on after the operation, otherwise it can cause all kinds of problems in the future, if your code relies on data found in the history table

(querying for specific periods og FOR SYSTEM_TIME ALL)

Since we are not doing DATA_CONSISTENCY_CHECK this has happened because of an error in the maintenance operation,

— Do some Pre change operations that does not need to be inside the transaction

BEGIN TRAN

— turn off System Versioning

ALTER TABLE [dbo].[WebsiteUserInfo] SET ( SYSTEM_VERSIONING = OFF);

GO

— deaktivate SYSTEM TIME, ValidFrom, ValidTo can be updated if needed

ALTER TABLE [dbo].[WebsiteUserInfo] DROP PERIOD FOR SYSTEM_TIME

GO

— DO YOUR THING

–Reactivate SYSTEM_TIME

ALTER TABLE [dbo].[WebsiteUserInfo] ADD PERIOD FOR SYSTEM_TIME(ValidFrom, ValidTo);

GO

— turn on System Versioning

ALTER TABLE [dbo].[WebsiteUserInfo] SET ( SYSTEM_VERSIONING = ON ( HISTORY_TABLE = dbo.[WebsiteUserInfoHistory], DATA_CONSISTENCY_CHECK = OFF ));

GO

COMMIT TRAN

TRY CATCH

— check if systemversioning is turned back on, if not try again and report that it happened